17.6. MapReduce and Data Parallelism

Friday, July 14, 2017

Partition the training set according to the number of cores/machines to be used, distribute the partitions to those cores/machines, run the computations, and combine the results.

In the example above, we split a data set with m=400 into 4 parts and process each part on a different machine. In theory, this gives us up to a 4x speedup.

If you are thinking of applying MapReduce to some learning algorithm, the key question you need to answer is this:

Can your learning algorithm be expressed as a summation over the training set?

It turns out that many learning algorithms can be expressed as sums of functions over the training set.
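As a rough illustration of the "summation over the training set" idea, here is a minimal sketch of one batch gradient descent step for linear regression, computed in a MapReduce style. The function names (`partial_gradient`, `split`, `mapreduce_step`) and the toy data are my own assumptions, and the "machines" are simulated by a plain loop over chunks.

```python
# Sketch: one batch-gradient-descent step for linear regression,
# computed MapReduce-style. All names here are illustrative.

def partial_gradient(theta, chunk):
    """Map step: sum of (h(x) - y) * x_j over one chunk of the training set."""
    grad = [0.0] * len(theta)
    for x, y in chunk:
        error = sum(t * xj for t, xj in zip(theta, x)) - y
        for j, xj in enumerate(x):
            grad[j] += error * xj
    return grad

def split(data, parts):
    """Partition the training set into `parts` nearly equal chunks."""
    size = (len(data) + parts - 1) // parts
    return [data[i:i + size] for i in range(0, len(data), size)]

def mapreduce_step(theta, data, alpha, parts=4):
    # Map: each chunk could run on its own core or machine.
    partials = [partial_gradient(theta, chunk) for chunk in split(data, parts)]
    # Reduce: add the partial sums, then apply the usual update.
    total = [sum(p[j] for p in partials) for j in range(len(theta))]
    m = len(data)
    return [t - alpha * g / m for t, g in zip(theta, total)]

# m = 400 examples of y = 2x, with x0 = 1 as the intercept feature.
data = [([1.0, i / 400.0], 2.0 * i / 400.0) for i in range(400)]
theta = [0.0, 0.0]
for _ in range(1000):
    theta = mapreduce_step(theta, data, alpha=0.5)
```

Because the gradient is a plain sum, splitting it into 4 partial sums gives the same update as computing it on one machine (up to floating-point rounding). Only the map step would actually be distributed.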

17.4. Stochastic Gradient Descent Convergence

Friday, July 14, 2017

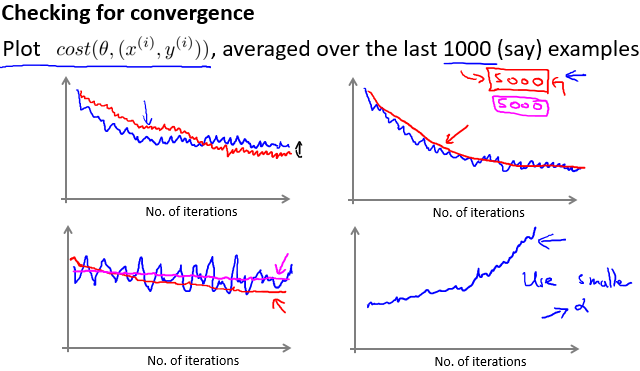

How do you make sure that the algorithm is completely debugged and is converging properly?

How do you tune the learning rate alpha?

When we have a huge data set of, say, 300,000,000 training examples, we can't compute the cost over the whole training set for the current theta parameters to debug the algorithm, the way we did with much smaller data sets. Doing so would be far too slow, and the practice itself contradicts the idea of Stochastic Gradient Descent: SGD updates the parameters after each example instead of scanning all the training data first.

I.

The red line represents an algorithm with a smaller learning rate than the blue one. With it we may end up with slightly better values for the theta parameters, and therefore a better hypothesis function, as the red line shows. We know that Stochastic Gradient Descent does not simply converge to the global minimum the way Batch GD does. Instead, the parameters oscillate a bit around the global minimum. With a smaller learning rate, the oscillations are smaller.

II.

Increasing the number of examples we average over may give us a smoother curve. The disadvantage is that each point on the plot arrives later, so feedback on how the algorithm is doing is delayed.
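The averaging described here could be sketched as follows, assuming linear regression with a squared-error cost and a hypothetical `window` parameter for the number of examples averaged per plotted point. The function name and the synthetic data are assumptions made for illustration.

```python
import random

def sgd_with_monitor(data, alpha, window=1000, epochs=1, seed=0):
    """Run SGD for linear regression; return theta and the averaged costs."""
    rng = random.Random(seed)
    theta = [0.0] * len(data[0][0])
    averages, recent = [], []
    for _ in range(epochs):
        examples = data[:]
        rng.shuffle(examples)
        for x, y in examples:
            error = sum(t * xj for t, xj in zip(theta, x)) - y
            # Cost of this single example, measured BEFORE updating theta.
            recent.append(0.5 * error ** 2)
            theta = [t - alpha * error * xj for t, xj in zip(theta, x)]
            if len(recent) == window:
                averages.append(sum(recent) / window)  # one point on the plot
                recent = []
    return theta, averages

# Synthetic data: y = 3 + 2x plus a little Gaussian noise.
rng = random.Random(1)
data = []
for _ in range(20000):
    x1 = rng.random()
    data.append(([1.0, x1], 3.0 + 2.0 * x1 + rng.gauss(0, 0.1)))

theta, averages = sgd_with_monitor(data, alpha=0.05, window=1000)
```

Plotting `averages` gives the kind of curve discussed in these notes. A larger `window` smooths the curve but produces fewer points, each arriving later.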

III.

If we end up with a highly noisy line like the blue one, we may think that the algorithm is not learning, since the cost is not decreasing. That may or may not be true. In a situation like this, try increasing the number of examples you average over. We may end up with the red or the pink line:

In the case of the red line: The algorithm is actually doing well and the cost is decreasing. We just failed to see it because the line was so noisy.

In the case of the pink line: The algorithm is not learning. We need to change the learning rate, the features, or something else.

IV.

The algorithm diverges. Use a smaller value for the learning rate alpha.

Since decreasing the alpha value reduces the oscillations, it is possible for Stochastic GD to converge to the global minimum by slowly decreasing the learning rate alpha over time.

This adds two more parameters, const1 and const2, that you need to tune, which makes this approach less popular.
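These notes do not state the formula that uses const1 and const2. A commonly used schedule, assumed here, is alpha = const1 / (iterationNumber + const2). A minimal sketch under that assumption, with a hypothetical function name:

```python
def decayed_alpha(iteration, const1, const2):
    """Assumed schedule: alpha shrinks as the iteration count grows."""
    return const1 / (iteration + const2)

# Learning rate at a few iteration counts, for const1 = 1 and const2 = 10.
rates = [decayed_alpha(i, const1=1.0, const2=10.0) for i in (0, 10, 90, 990)]
```

Under this schedule, const1 sets the overall scale and const2 controls how slowly the rate starts to fall, so both have to be chosen by experiment.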

17.3. Mini-Batch Gradient Descent

Wednesday, July 12, 2017

Mr. Ng says that he generally uses b = 10.

In general, b takes a value between 2 and 100.

Having a good vectorized implementation may enable Mini-batch GD to outperform Stochastic GD.

Mini-batch GD can outperform Stochastic GD only if you have a good vectorized implementation of the derivative computation. A vectorized implementation is not only a more efficient way to compute these terms, it also lets you partially parallelize the computation.

Mini-batch GD is a middle way between Batch GD and Stochastic GD.
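A minimal sketch of Mini-batch GD for linear regression, assuming a squared-error cost. The function name and toy data are my own. It is written in plain Python for readability, so the inner loop over the batch is exactly the part a linear algebra library would replace with one vectorized operation.

```python
import random

def minibatch_gd(data, alpha, b=10, epochs=5, seed=0):
    """Update theta once per mini-batch of b examples."""
    rng = random.Random(seed)
    theta = [0.0] * len(data[0][0])
    for _ in range(epochs):
        examples = data[:]
        rng.shuffle(examples)
        for start in range(0, len(examples), b):
            batch = examples[start:start + b]
            # Sum the gradient over the b examples in this batch.
            # This loop is what a vectorized implementation would replace.
            grad = [0.0] * len(theta)
            for x, y in batch:
                error = sum(t * xj for t, xj in zip(theta, x)) - y
                for j, xj in enumerate(x):
                    grad[j] += error * xj
            theta = [t - alpha * g / len(batch) for t, g in zip(theta, grad)]
    return theta

# Noise-free toy data: y = 1 + 4x, with x0 = 1 as the intercept feature.
data = [([1.0, i / 1000.0], 1.0 + 4.0 * i / 1000.0) for i in range(1000)]
theta = minibatch_gd(data, alpha=0.5, b=10, epochs=50)
```

Setting b = 1 gives Stochastic GD and setting b = m gives Batch GD, which is the sense in which Mini-batch GD sits between the two.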